The EdgeTPU is a small ASIC that provides high-performance machine learning (ML) inferencing for low-power devices. It can perform real-time image classification or object detection while achieving accuracies typically seen only when running much larger, compute-heavy models in data centers. In this article, we provide an overview of the EdgeTPU and our web-based retraining system, which allows users with limited machine learning and AI expertise to build high-quality models for Ohmni.

CPU vs GPU vs TPU

The CPU is a general-purpose processor based on the von Neumann architecture. The main advantage of a CPU is its flexibility: thanks to the von Neumann architecture, it can load any kind of software for millions of different applications. However, because the CPU is so flexible, the hardware doesn’t always know what the next calculation will be until it reads the next instruction from the software.

Therefore, a CPU has to store the result of every single calculation in memory inside the CPU (registers or L1 cache). This mechanism is the main bottleneck of the CPU architecture. For example, the huge number of calculations in a deep learning model is completely predictable, but each of the CPU’s Arithmetic Logic Units (ALUs) executes them one by one, accessing memory every time, which limits total throughput and consumes significant energy.
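As a rough illustration of this one-by-one execution, the sketch below computes a small dense layer with a single scalar multiply-accumulate at a time and counts how often the running sum is read and written. It is a toy model only: the function name `dense_layer_scalar` and the access counter are hypothetical, and Python does not model real CPU registers or caches.

```python
def dense_layer_scalar(weights, inputs):
    """Compute weights @ inputs one multiply-accumulate at a time.

    Returns the output vector and the number of times the running sum
    (a stand-in for a CPU register) was read or written.
    """
    outputs = []
    accumulator_accesses = 0
    for row in weights:
        acc = 0.0
        for w, x in zip(row, inputs):
            # Each step reads the running sum, adds one product, and writes it back.
            acc = acc + w * x
            accumulator_accesses += 2
        outputs.append(acc)
    return outputs, accumulator_accesses


if __name__ == "__main__":
    result, accesses = dense_layer_scalar([[1, 2], [3, 4]], [1, 1])
    print(result, accesses)
```

Even for this 2x2 example, every product triggers its own read and write of the intermediate result, which is the overhead the paragraph above describes.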

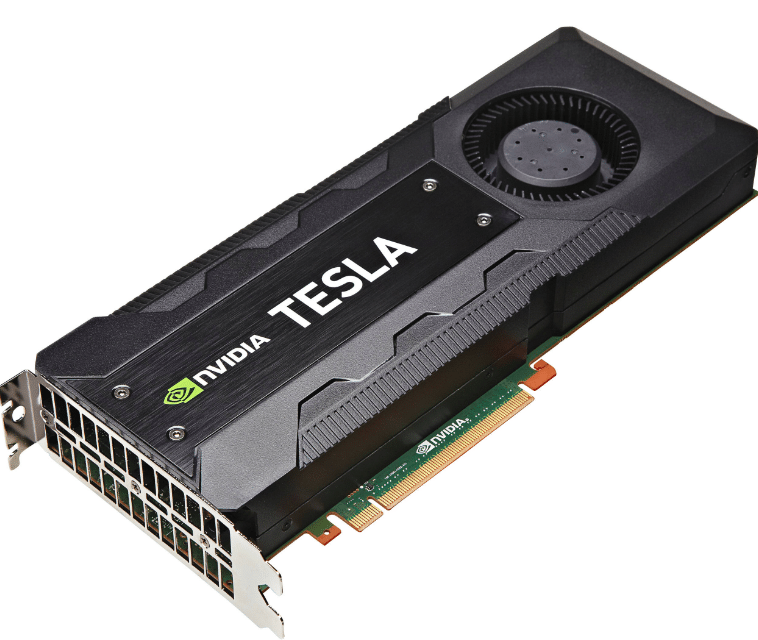

Meanwhile, the GPU architecture (Figure 1) is designed for applications with massive parallelism, such as the matrix calculations in deep learning models. It uses thousands of ALUs in a single processor to achieve higher throughput than a CPU. A modern GPU typically has 2,500–5,000 ALUs in a single processor, which means it can execute thousands of computations simultaneously.

However, the GPU is still a general-purpose processor that has to support a wide range of applications. For every single calculation in its thousands of ALUs, the GPU needs to access registers or shared memory to read and store the intermediate results. Because the GPU performs more calculations in parallel, it also spends proportionally more energy accessing memory, and the complex wiring this requires increases its footprint.

Figure 1: NVIDIA Tesla K40 GPU Accelerator

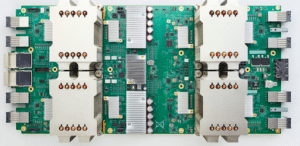

An alternative to the general-purpose processor is the TPU, illustrated in Figure 2. It was designed by Google with the aim of building a domain-specific architecture. In particular, the TPU is specialized for the matrix calculations in deep learning models by using a systolic array architecture. Because the primary task of this processor is matrix processing, the hardware designers of the TPU know every calculation step needed to perform that operation. They can place thousands of multipliers and adders and connect them directly to form a large physical matrix of those operators.

Therefore, once the data has been loaded into the array, intermediate results pass directly from one operator to the next, and no memory access is required during the whole process of massive calculations and data passing. For this reason, the TPU can achieve high computational throughput on deep learning calculations with much less power consumption and a smaller footprint.

Figure 2: Google Cloud TPU
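To make the systolic array idea more concrete, here is a minimal software simulation of one. It is a sketch under stated assumptions, not a description of the TPU's actual hardware: it uses a simple "output-stationary" layout in which each cell keeps one running sum, and the function name `systolic_matmul` is hypothetical. On every cycle each cell takes one value from its left neighbor and one from the neighbor above, multiplies them, and adds the product to its own sum, so operands move between cells instead of going back to memory.

```python
def systolic_matmul(a, b):
    """Multiply a (n x k) by b (k x m) on a simulated n x m systolic array."""
    n, k, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]    # one running sum per cell
    a_reg = [[0] * m for _ in range(n)]  # value each cell received from the left
    b_reg = [[0] * m for _ in range(n)]  # value each cell received from above

    # The last operands reach the bottom-right cell at cycle n + m + k - 3.
    for t in range(n + m + k - 2):
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:
                    # Left edge: feed row i of a, delayed by i cycles.
                    s = t - i
                    new_a[i][j] = a[i][s] if 0 <= s < k else 0
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:
                    # Top edge: feed column j of b, delayed by j cycles.
                    s = t - j
                    new_b[i][j] = b[s][j] if 0 <= s < k else 0
                else:
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b
        for i in range(n):
            for j in range(m):
                # Multiply-accumulate using only values passed in by neighbors.
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc


if __name__ == "__main__":
    print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

The staggered feeding at the edges is what keeps matching operands arriving at the same cell on the same cycle; in hardware, all cells would perform their multiply-accumulate in parallel rather than in a loop.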

Cloud vs Edge

The EdgeTPU runs on the edge, but what is the edge and why wouldn’t we want to run everything in the cloud?

Running code in the cloud means that we use CPUs, GPUs, and TPUs provided by services such as Amazon Web Services or Google Cloud Platform. The main benefit of running code in the cloud is that we can assign exactly the amount of computing power that a specific piece of code needs. Training large models can take a lot of computation, so this flexibility is important.

In contrast, running code on the edge means that the code runs on-premises, on a device that users can physically touch. The primary benefit of this approach is that there is no network latency, which is great for IoT and robotics-based solutions that generate a large amount of data.

Retraining System for EdgeTPU Models

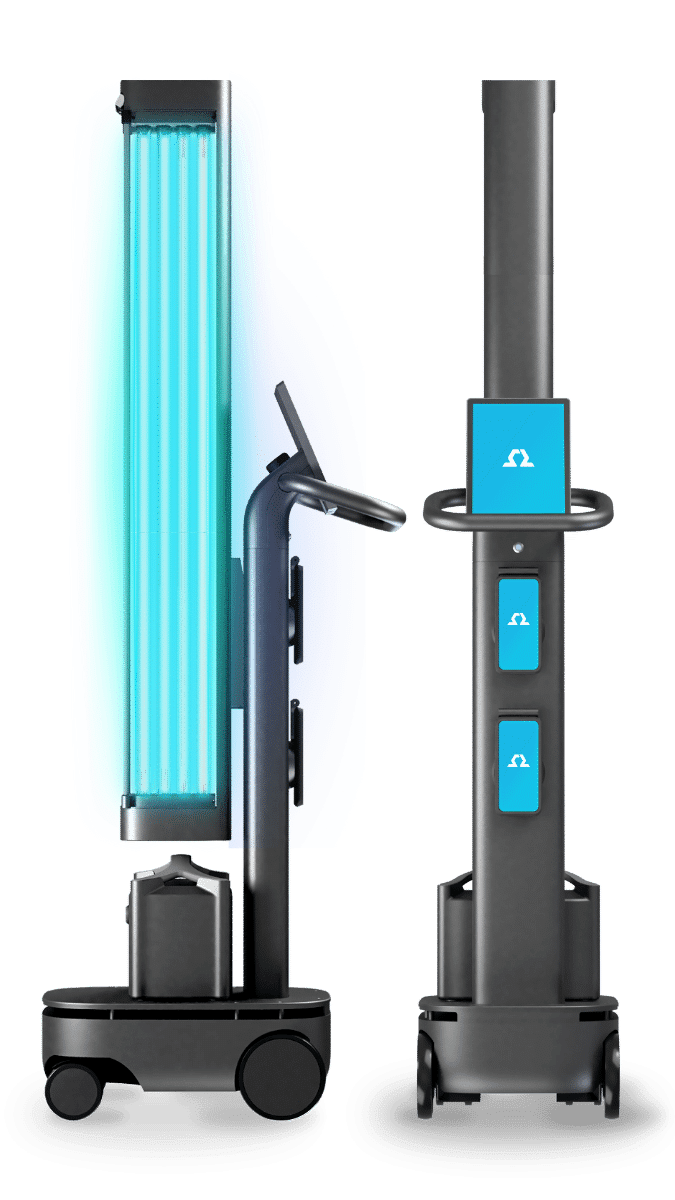

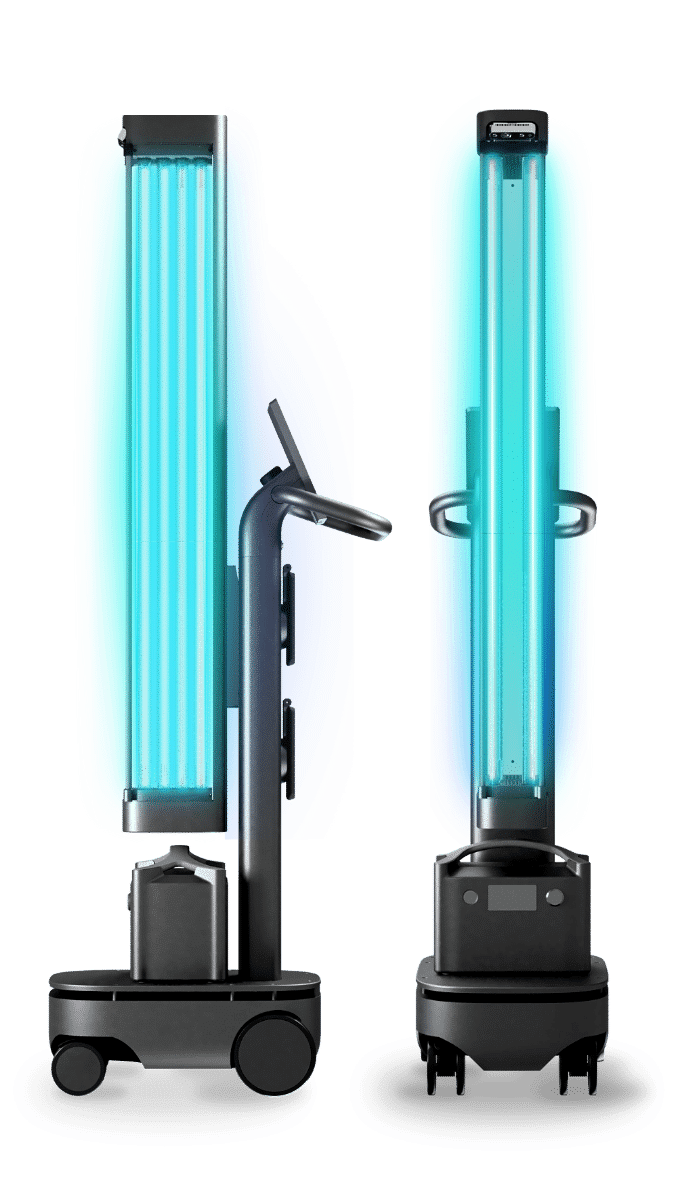

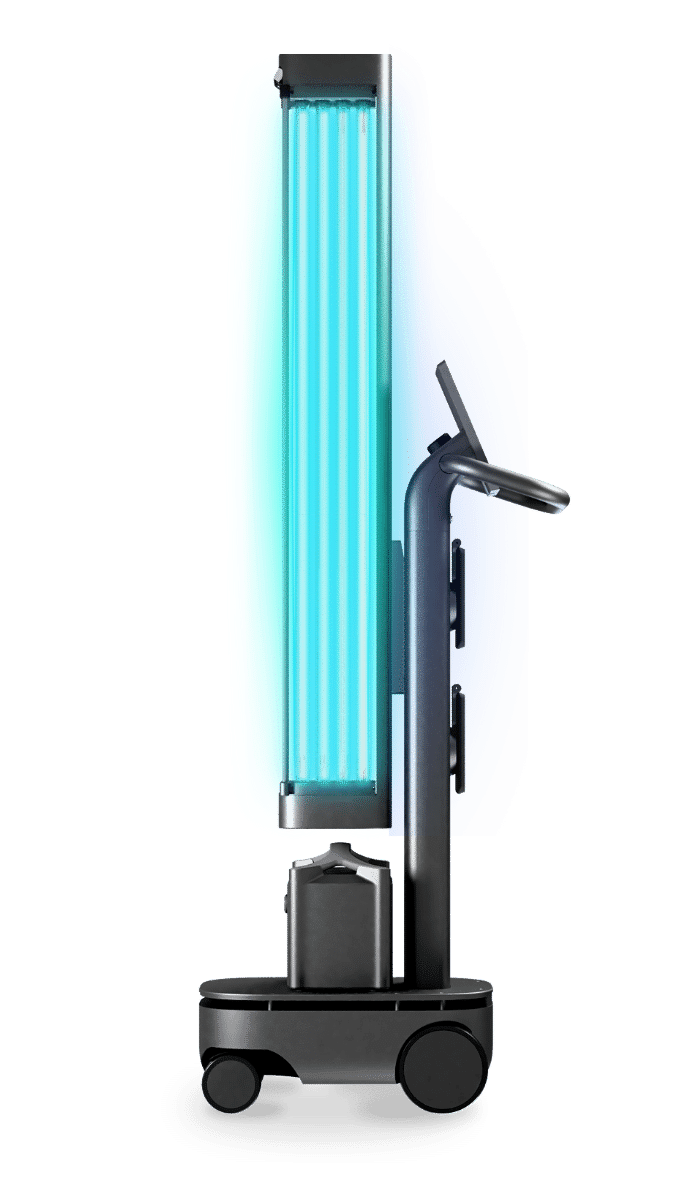

Figure 3: Coral USB

Ohmni supports the Coral USB Accelerator (Figure 3), so users can build their own AI applications with this device. The Coral USB Accelerator is an accessory that adds the EdgeTPU as a coprocessor to Ohmni. Users of Ohmni Developer Edition can request this additional hardware customization and then train their own EdgeTPU-compatible deep learning models to deploy to Ohmni.

However, training these models requires advanced knowledge of machine learning and AI. To help users build AI applications on Ohmni, we developed a web-based retraining system for EdgeTPU models that lets users train high-quality deep learning models with minimal effort and machine learning expertise.

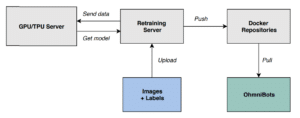

Figure 4: An overview of the retraining system for EdgeTPU models

Figure 4 depicts an overview of our retraining system. To create a custom model for EdgeTPU, users upload their training images and the corresponding annotations to our retraining server via a web browser.

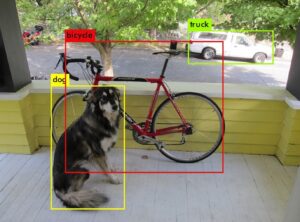

For example, to train an object detection model, the training data consists of a set of images and a set of annotation files that contain the bounding box coordinates of every object in the images (Figure 5). In general, the more training data is provided, the better the resulting model performs.

Figure 5: An example of training data for an object detection model
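The article does not specify the annotation file format that the retraining system expects, so the following is only a hypothetical sketch of what one annotation record might contain and how it could be checked before upload. The `BoundingBox` fields (`label`, `xmin`, `ymin`, `xmax`, `ymax`, in pixels) and the `validate_boxes` helper are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """One annotated object: a class label plus pixel coordinates (hypothetical layout)."""
    label: str
    xmin: int
    ymin: int
    xmax: int
    ymax: int


def validate_boxes(image_width, image_height, boxes):
    """Return a list of problems found; an empty list means every box looks valid."""
    problems = []
    for index, box in enumerate(boxes):
        if not box.label:
            problems.append(f"box {index}: empty label")
        if box.xmin >= box.xmax or box.ymin >= box.ymax:
            problems.append(f"box {index}: min corner must be above and left of max corner")
        if box.xmin < 0 or box.ymin < 0 or box.xmax > image_width or box.ymax > image_height:
            problems.append(f"box {index}: extends outside the image")
    return problems


if __name__ == "__main__":
    boxes = [BoundingBox("cup", 10, 20, 110, 220), BoundingBox("cup", 600, 20, 700, 220)]
    for problem in validate_boxes(640, 480, boxes):
        print(problem)
```

Catching malformed boxes like these before training is a cheap way to avoid wasting a training run on bad labels.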

Next, our retraining server runs several pre-processing steps on the users' raw data and then uploads the data to our high-performance GPU/TPU servers for model training. Once training finishes, the retraining server retrieves the trained model, converts it into the EdgeTPU format, and builds a Docker image that serves the trained EdgeTPU model on Ohmni.
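The article does not describe how the conversion to the EdgeTPU format works. One ingredient that conversion for the Edge TPU generally involves is 8-bit quantization, because the chip runs 8-bit quantized models. The sketch below shows the basic arithmetic of mapping floating-point values onto 256 integer levels and back. The function names are hypothetical and this is not the retraining server's actual code; it only illustrates the idea.

```python
def quantization_params(values, num_levels=256):
    """Pick a scale and zero point so that the value range (including 0.0) fits num_levels integers."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    if hi == lo:
        return 1.0, 0  # all values are zero; any scale works
    scale = (hi - lo) / (num_levels - 1)
    zero_point = round(-lo / scale)  # the integer that represents 0.0
    return scale, zero_point


def quantize(values, scale, zero_point, num_levels=256):
    """Map floats to integers in [0, num_levels - 1], clamping values that fall outside."""
    return [min(num_levels - 1, max(0, round(v / scale) + zero_point)) for v in values]


def dequantize(quantized, scale, zero_point):
    """Map integers back to approximate float values."""
    return [(q - zero_point) * scale for q in quantized]


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
    scale, zero_point = quantization_params(weights)
    q = quantize(weights, scale, zero_point)
    print(q)
    print(dequantize(q, scale, zero_point))
```

The restored values differ slightly from the originals; that small rounding error is the price paid for storing and computing with 8-bit integers instead of 32-bit floats.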

Finally, users can pull the Docker image onto their Ohmni Developer Edition equipped with a Coral USB Accelerator and build their applications using the trained EdgeTPU models.

This novel web-based retraining system alongside Ohmni with EdgeTPU support will be available for beta testing on Ohmni Developer Edition later this year. If you are interested in learning more about this feature, please contact us.

Summing Up EdgeTPU

This article provides an introduction to the EdgeTPU and describes how it brings high-performance AI inferencing to Ohmni. It also introduces our web-based retraining system for EdgeTPU models, which helps users build AI applications on Ohmni. This system will be one of our new cloud services.

To learn more about Ohmni Developer Edition, click here. If you’re ready to purchase your own Ohmni, visit our store: https://store.ohmnilabs.com/products/ohmni-developers-kit.

Written by Ba-Hien Tran