Deep learning is a powerful tool when applied to robotics. This article discusses how OhmniLabs uses deep learning for autodocking calibration in its telepresence robot.

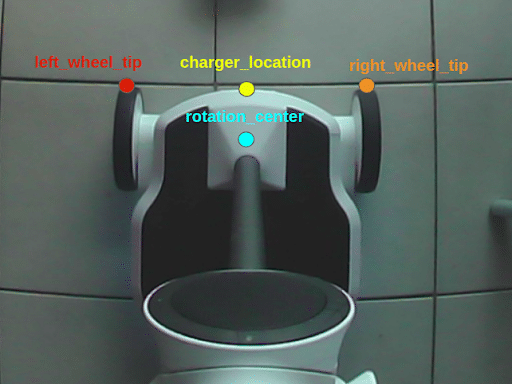

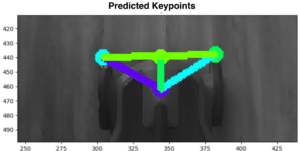

The Ohmni robot can find its docking station and drive to it to recharge. An important step in autodocking is calibrating the camera image against the robot's coordinates in the real world. To do this, we first locate several keypoints on the robot base in the camera image. From these points, we compute the transformation from the camera image to the robot coordinates. For the Ohmni robots, we use 4 keypoints, as depicted in the figure below.

Figure 1: The 4 keypoints used to calibrate between the camera image and robot coordinates.
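
The article does not say which kind of transformation is computed. One common choice, assumed here, is a planar homography: four point correspondences are exactly enough to determine it. The sketch below solves for it with NumPy; the pixel and robot-frame coordinates are made-up values for illustration only.

```python
import numpy as np

def estimate_homography(image_pts, robot_pts):
    """Solve for the 3x3 homography H that maps image points to robot-frame points.

    Fixes the bottom-right entry of H to 1, so exactly four correspondences
    (no three of them collinear) give an 8x8 linear system.
    """
    rows, rhs = [], []
    for (u, v), (x, y) in zip(image_pts, robot_pts):
        rows.append([u, v, 1, 0, 0, 0, -x * u, -x * v])
        rows.append([0, 0, 0, u, v, 1, -y * u, -y * v])
        rhs.extend([x, y])
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def image_to_robot(H, point):
    """Apply H to one pixel coordinate and dehomogenize the result."""
    u, v = point
    x, y, w = H @ np.array([u, v, 1.0])
    return np.array([x / w, y / w])

# Hypothetical keypoints: pixels in the image, meters in the robot frame.
image_pts = [(220, 400), (420, 400), (480, 470), (160, 470)]
robot_pts = [(-0.15, 0.30), (0.15, 0.30), (0.15, 0.10), (-0.15, 0.10)]

H = estimate_homography(image_pts, robot_pts)
print(image_to_robot(H, (320, 435)))  # robot-frame position of an arbitrary pixel
```

Once `H` is known, any pixel on the same plane (for example, a detected docking station marker) can be mapped into robot coordinates with `image_to_robot`.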

Previously, the keypoints were chosen manually. This was not only time-consuming but could also lead to poor calibration if the neck was tilted or the lens type was changed. To address this issue, rather than saving the coordinates of the keypoints ahead of time, we use an image-based algorithm that lets the robot detect the keypoints directly from the camera image and then dock accordingly.

Deep Learning-based Keypoints Detection Model

Most image-based methods extract low-level visual features from keypoints or regions. Such low-level feature representations usually lack semantic interpretation, which means they cannot capture high-level category appearance. To improve robustness, we can integrate external constraints such as CAD models or robot kinematics, but the image-driven approach remains central to building robust and generalizable systems.

Deep learning has emerged as the method of choice for AI tasks such as computer vision, speech recognition and natural language processing. It allows computational models composed of multiple processing layers to learn representations of data at multiple levels of abstraction. With deep learning models, we no longer need features hand-designed by engineers; instead, the models learn features automatically from the raw data.

To take advantage of this approach, we extend the OpenPose model, an efficient method originally developed for multi-person pose estimation that uses Part Affinity Fields. The model consists of a set of deep neural networks that jointly learn image features and localize the keypoints in the image. The architecture of the model is shown in the figure below. To benefit from GPU/TPU parallel computing and from tooling for serving trained models, we implement the model in TensorFlow.

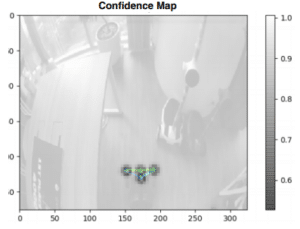

The model first extracts features from an input image using a pre-trained convolutional neural network. The image features are then fed into two parallel branches of further convolution layers. The first branch predicts a set of confidence maps, one per keypoint. Each confidence map is a matrix that stores, for every pixel, the network's confidence that the pixel contains that keypoint. Figure 2 shows an example of the confidence map of keypoints.

Figure 2: An example of the confidence map of keypoints
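
To make the idea concrete, here is a minimal sketch of reading keypoint locations off confidence maps. It assumes a single robot base per image, so each map has one peak and a plain argmax is enough; the multi-person OpenPose pipeline uses non-maximum suppression to find several peaks instead. The function names, map size and threshold are illustrative, not taken from the production code.

```python
import numpy as np

def extract_keypoints(confidence_maps, threshold=0.5):
    """Return one (x, y, score) per keypoint map, or None if the peak is too weak.

    confidence_maps has shape (num_keypoints, height, width).
    """
    keypoints = []
    for heatmap in confidence_maps:
        row, col = np.unravel_index(np.argmax(heatmap), heatmap.shape)
        score = float(heatmap[row, col])
        keypoints.append((int(col), int(row), score) if score >= threshold else None)
    return keypoints

def gaussian_map(height, width, center, sigma=2.0):
    """Synthetic confidence map: a Gaussian blob centered on (x, y)."""
    ys, xs = np.mgrid[0:height, 0:width]
    cx, cy = center
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2 * sigma ** 2))

# Four synthetic maps standing in for the network output.
centers = [(10, 12), (30, 12), (34, 25), (6, 25)]
maps = np.stack([gaussian_map(32, 40, c) for c in centers])
detected = extract_keypoints(maps)
print(detected)
```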

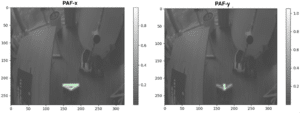

Figure 3: An example of the PAF in the horizontal direction (left) and in the vertical direction (right)

The second branch predicts a set of Part Affinity Fields (PAFs), which represent the degree of association between keypoints. PAFs are matrices that encode the position and orientation of the connection between a pair of keypoints. They come in couples: for each pair of connected keypoints we have a PAF in the horizontal direction and a PAF in the vertical direction, as illustrated in Figure 3. Successive stages are used to refine the predictions made by each branch.
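
As a rough illustration of how a pair of PAFs can score a candidate connection, the sketch below samples points along the segment between two keypoints and averages the dot product between the field and the segment direction. This follows the general idea of the OpenPose paper; the sampling scheme and the synthetic field are assumptions made for the example.

```python
import numpy as np

def paf_score(paf_x, paf_y, start, end, num_samples=10):
    """Average alignment between the PAF and the segment from start to end.

    paf_x and paf_y are (height, width) arrays; start and end are (x, y) pixels.
    A score near 1 means the field points along the segment everywhere.
    """
    start = np.asarray(start, dtype=float)
    end = np.asarray(end, dtype=float)
    direction = end - start
    length = np.linalg.norm(direction)
    if length == 0:
        return 0.0
    unit = direction / length
    total = 0.0
    for t in np.linspace(0.0, 1.0, num_samples):
        x, y = np.rint(start + t * direction).astype(int)
        total += paf_x[y, x] * unit[0] + paf_y[y, x] * unit[1]
    return float(total / num_samples)

# Synthetic field: a horizontal connection along row 5, from x=2 to x=15.
paf_x = np.zeros((20, 20))
paf_y = np.zeros((20, 20))
paf_x[5, 2:16] = 1.0

aligned = paf_score(paf_x, paf_y, (2, 5), (15, 5))      # follows the field
misaligned = paf_score(paf_x, paf_y, (2, 5), (2, 15))   # perpendicular to it
print(aligned, misaligned)
```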

Using the part confidence maps, bipartite graphs are formed between the candidate keypoints of each pair of parts. Using the PAF values, weaker links in the bipartite graphs are pruned. Through these steps, the keypoints and the skeleton of the robot base can be estimated. Figure 4 illustrates an example of the estimated keypoints and the connections between the keypoints.

Figure 4: An example of the estimated keypoints and the connections between the keypoints
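
The pruning step can be sketched as a greedy assignment over a table of connection scores. This is a simplified illustration, not necessarily the matching strategy used in production: the score values and the `min_score` threshold are invented for the example.

```python
def match_candidates(scores, min_score=0.3):
    """Greedily pair candidates of part A with candidates of part B.

    scores[i][j] is the connection score between candidate i of part A and
    candidate j of part B. Links below min_score are pruned, and each
    candidate is used at most once.
    """
    links = sorted(
        ((s, i, j) for i, row in enumerate(scores) for j, s in enumerate(row)),
        reverse=True,
    )
    used_a, used_b, matches = set(), set(), []
    for s, i, j in links:
        if s < min_score:
            break  # every remaining link is weaker, so stop here
        if i not in used_a and j not in used_b:
            matches.append((i, j, s))
            used_a.add(i)
            used_b.add(j)
    return matches

# Three candidates for part A, two for part B (hypothetical scores).
scores = [
    [0.90, 0.20],
    [0.10, 0.80],
    [0.25, 0.05],
]
matches = match_candidates(scores)
print(matches)
```

Here the third candidate of part A stays unmatched because all of its links fall below the threshold.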

Normally, training a deep learning model requires a large amount of data. We collected thousands of images under varied conditions so that the trained model would be robust to them. Specifically, we used many types of robot to capture images across different floors, lighting conditions, camera types and camera tilt angles.

Moreover, we employed data augmentation techniques to increase the size of the dataset and to help the model generalize better. After a series of experiments, our final model achieves a mean average precision (mAP) of approximately 98% on the test data. Note that we can keep improving the model over time; in general, the more training data we collect, the better the model performs.
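
The article does not list the augmentations that were used. As an illustration, the sketch below shows two common ones, a horizontal flip and a brightness change, and highlights that geometric augmentations must update the keypoint labels together with the image. The function names and values are assumptions for the example.

```python
import numpy as np

def flip_horizontal(image, keypoints):
    """Mirror the image left to right and move the keypoints with it.

    Note: after a flip, left and right keypoint labels may need to be
    swapped, depending on how the keypoints are defined.
    """
    width = image.shape[1]
    flipped = image[:, ::-1].copy()
    mirrored = [(width - 1 - x, y) for x, y in keypoints]
    return flipped, mirrored

def adjust_brightness(image, factor):
    """Scale pixel intensities and clip back to the valid 8-bit range."""
    scaled = image.astype(np.float32) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8)

# Tiny grayscale image with one bright pixel marking a keypoint at (x=2, y=1).
image = np.zeros((4, 6), dtype=np.uint8)
image[1, 2] = 200
keypoints = [(2, 1)]

flipped, mirrored = flip_horizontal(image, keypoints)
brighter = adjust_brightness(image, 1.5)
print(mirrored, brighter[1, 2])
```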

Model Training and Serving Architecture

Creating deep learning models is only one part of the problem. The next challenge is to find a way to serve the models in production. The model serving system must be able to handle a large volume of traffic. It is important for us to ensure that the software and hardware infrastructure serving these models is scalable, reliable and fault-tolerant.

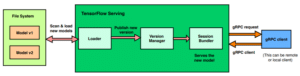

We decided to use TensorFlow Serving for model serving. TensorFlow Serving is a system written in C++ for serving machine learning models. It treats each model as a servable object. It periodically scans the local file system, loading and unloading models based on the state of the file system and the model versioning policy. This allows trained models to be hot-deployed by copying the exported models to the specified file path while TensorFlow Serving continues running. TensorFlow Serving comes with a reference front-end implementation based on gRPC, a high-performance, open-source RPC framework from Google.

Figure 5: An overview of the architecture of TensorFlow Serving
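
Besides gRPC, TensorFlow Serving also exposes a REST API, which is used here only because it keeps the example short. The sketch builds a predict request without sending it. The model name `keypoint_detector`, the host and the input layout are hypothetical; the real input format depends on the signature of the exported model, and 8501 is the REST port conventionally used in the TensorFlow Serving examples.

```python
import json
import urllib.request

def build_predict_request(host, model_name, image_rows, port=8501):
    """Build a POST request for the TensorFlow Serving REST predict endpoint.

    image_rows is a nested list (height x width x channels) for one image.
    """
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": [image_rows]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# A 1x2 RGB "image" standing in for a real camera frame.
tiny_image = [[[0, 0, 0], [255, 255, 255]]]
request = build_predict_request("localhost", "keypoint_detector", tiny_image)
print(request.full_url)

# Against a running server, the request could be sent with:
#   response = urllib.request.urlopen(request)
#   predictions = json.loads(response.read())["predictions"]
```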

First, we train the TensorFlow models on cloud GPU instances. Once trained and validated, they are exported and published to our model repository. Next, we developed a model serving network (MSN) built on TensorFlow Serving. The MSN manages the job queues, the pre-processing of images and the post-processing of TensorFlow Serving predictions. It also load-balances requests from Ohmni robots and manages the updating of models from the repository. We have generalized this model training and serving architecture to serve other models and data as part of OhmniLabs’ deep learning AI framework.

Conclusion on Deep Learning for Autodocking Calibration

Our results demonstrate the power of deep learning for autodocking calibration. We find that our model can tackle the problem of camera calibration efficiently. Furthermore, we also built an infrastructure for model training and serving that allows for continuous improvement of the deep learning models. This architecture is deployed as part of OhmniLabs’ deep learning AI framework.

To learn more about Ohmni Developer Edition, please visit: https://store.ohmnilabs.com/products/ohmni-developers-kit.

Written by Ba-Hien Tran